本地部署Whisper实现语言转文字

文章目录

- 本地部署Whisper实现语言转文字

- 1.前置条件

- 2.安装chocolatey

- 3.安装ffmpeg

- 4.安装whisper

- 5.测试用例

- 6.命令行用法

- 7.本地硬件受限,借用hugging face资源进行转译

本地部署Whisper实现语言转文字

1.前置条件

环境windows10 64位

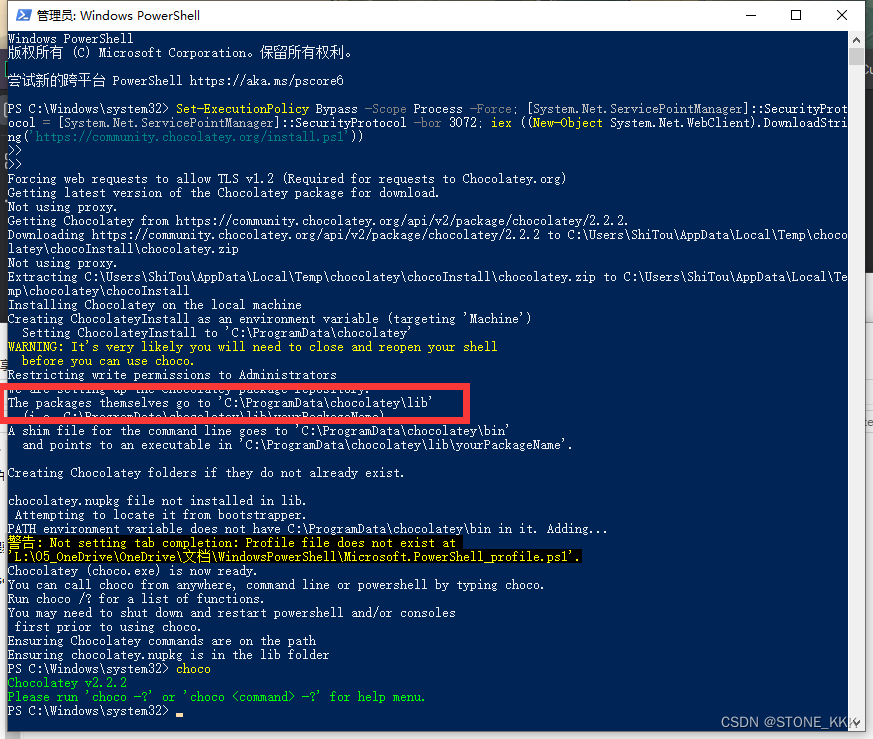

2.安装chocolatey

安装chocolatey目的是安装ffpeg

以管理员身份运行PowerShell

粘贴命令

Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://community.chocolatey.org/install.ps1'))安装成功打入choco

安装文件夹路径

C:\ProgramData\chocolatey

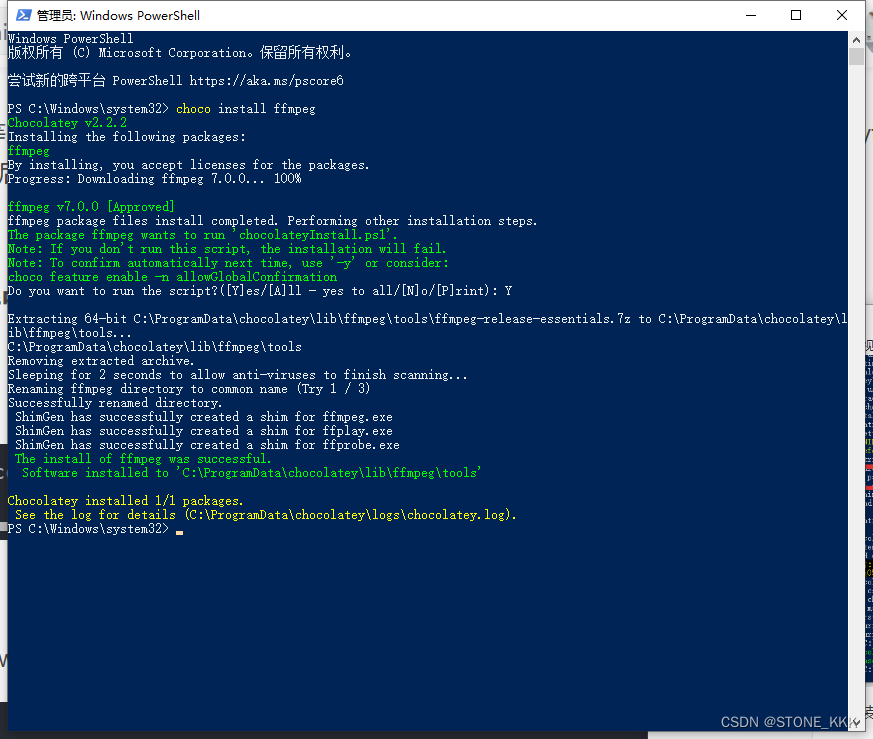

3.安装ffmpeg

choco install ffmpeg

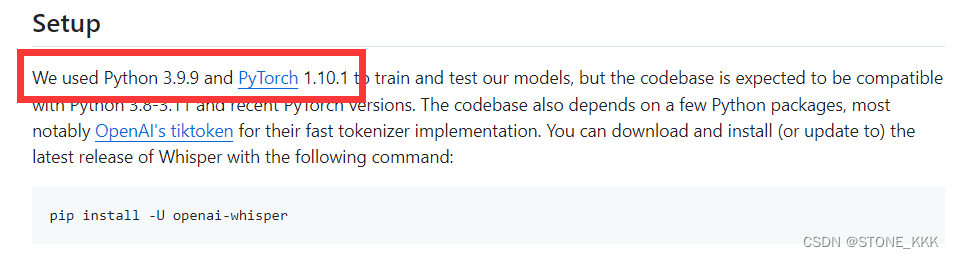

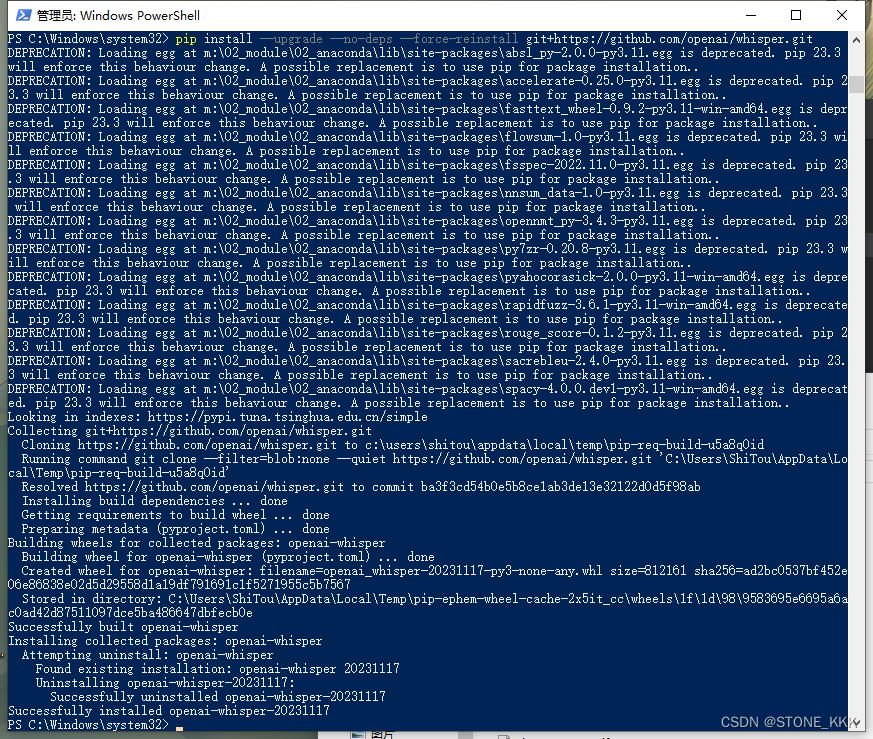

4.安装whisper

pip install git+https://github.com/openai/whisper.git

安装完成运行

pip install --upgrade --no-deps --force-reinstall git+https://github.com/openai/whisper.git

安装完成

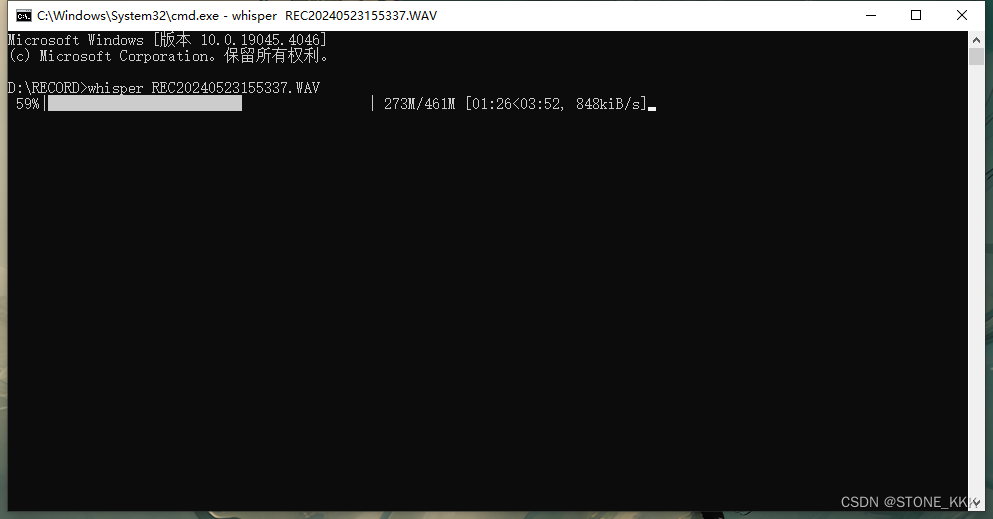

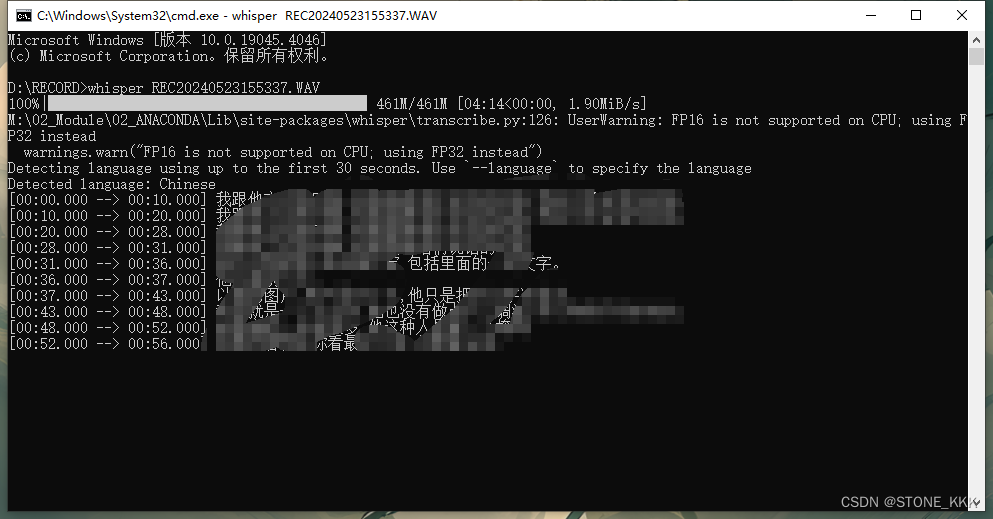

5.测试用例

直接命令行

whisper yoump3.mp3

6.命令行用法

以下命令将使用medium模型转录音频文件中的语音:

whisper audio.flac audio.mp3 audio.wav --model medium

默认设置(选择模型small)非常适合转录英语。要转录包含非英语语音的音频文件,您可以使用以下选项指定语言--language:

whisper japanese.wav --language Japanese

添加--task translate后将把演讲翻译成英文:

whisper japanese.wav --language Japanese --task translate

运行以下命令查看所有可用选项:

whisper --help

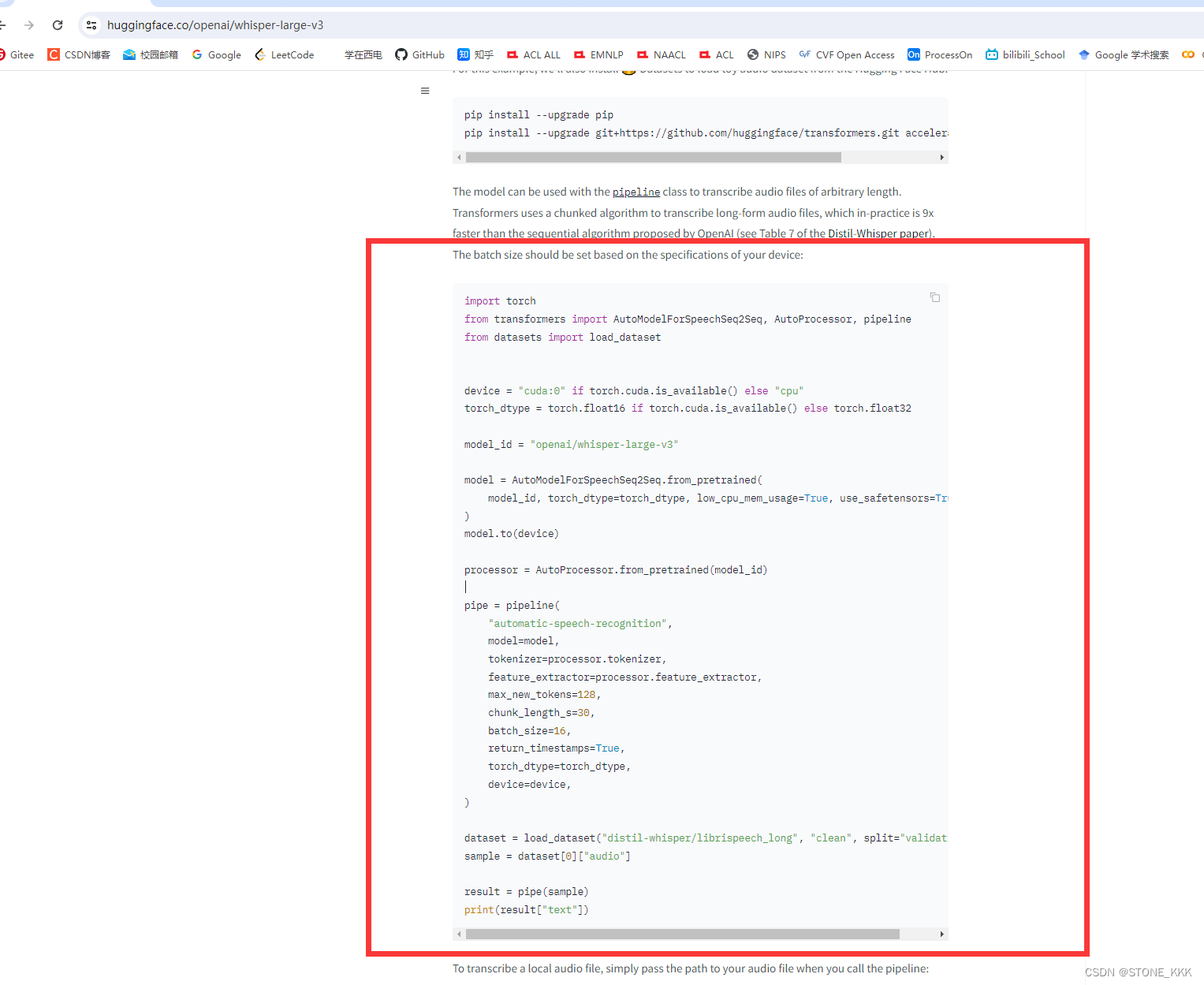

7.本地硬件受限,借用hugging face资源进行转译

进入huggingface网址,往下拉

https://huggingface.co/openai/whisper-large-v3

粘贴上述代码

import torch from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline from datasets import load_dataset device = "cuda:0" if torch.cuda.is_available() else "cpu" torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32 model_id = "openai/whisper-large-v3" model = AutoModelForSpeechSeq2Seq.from_pretrained( model_id, torch_dtype=torch_dtype, low_cpu_mem_usage=True, use_safetensors=True ) model.to(device) processor = AutoProcessor.from_pretrained(model_id) pipe = pipeline( "automatic-speech-recognition", model=model, tokenizer=processor.tokenizer, feature_extractor=processor.feature_extractor, max_new_tokens=128, chunk_length_s=30, batch_size=16, return_timestamps=True, torch_dtype=torch_dtype, device=device, ) dataset = load_dataset("distil-whisper/librispeech_long", "clean", split="validation") sample = dataset[0]["audio"] result = pipe(sample) print(result["text"])修改本地代码,将sample修改为,需要转录的录音,接入代理;

import torch from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor, pipeline from datasets import load_dataset import os os.environ['CURL_CA_BUNDLE'] = '' os.environ["http_proxy"] = "http://127.0.0.1:7890" os.environ["https_proxy"] = "http://127.0.0.1:7890" device = "cuda:0" if torch.cuda.is_available() else "cpu" torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32 model_id = "openai/whisper-large-v3" model = AutoModelForSpeechSeq2Seq.from_pretrained( model_id, torch_dtype=torch_dtype, low_cpu_mem_usage=True, use_safetensors=True ) model.to(device) processor = AutoProcessor.from_pretrained(model_id) pipe = pipeline( "automatic-speech-recognition", model=model, tokenizer=processor.tokenizer, feature_extractor=processor.feature_extractor, max_new_tokens=128, chunk_length_s=30, batch_size=16, return_timestamps=True, torch_dtype=torch_dtype, device=device, ) dataset = load_dataset("distil-whisper/librispeech_long", "clean", split="validation") sample = dataset[0]["audio"] result = pipe("myaudio") print(result["text"])借用huggingface的速度,速度取决于网速

免责声明:我们致力于保护作者版权,注重分享,被刊用文章因无法核实真实出处,未能及时与作者取得联系,或有版权异议的,请联系管理员,我们会立即处理!

部分文章是来自自研大数据AI进行生成,内容摘自(百度百科,百度知道,头条百科,中国民法典,刑法,牛津词典,新华词典,汉语词典,国家院校,科普平台)等数据,内容仅供学习参考,不准确地方联系删除处理!

图片声明:本站部分配图来自人工智能系统AI生成,觅知网授权图片,PxHere摄影无版权图库和百度,360,搜狗等多加搜索引擎自动关键词搜索配图,如有侵权的图片,请第一时间联系我们,邮箱:ciyunidc@ciyunshuju.com。本站只作为美观性配图使用,无任何非法侵犯第三方意图,一切解释权归图片著作权方,本站不承担任何责任。如有恶意碰瓷者,必当奉陪到底严惩不贷!