A Plugin-Free H.264/H.265 Stream Player Based on WebAssembly

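I previously came across two articles: "web无插件解码播放H264/H265(WebAssembly解码HTML5播放)" (plugin-free H264/H265 decoding and playback on the web, using WebAssembly decoding and HTML5 playback)

and "H.265/HEVC在Web视频播放的实践" (H.265/HEVC web video playback in practice).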

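- I. Installing the emsdk toolchain

- II. Compiling ffmpeg with emsdk

- III. The ffmpeg decoding library

- IV. Running the Makefile.sh script to generate the ffmpeg.js and ffmpeg.wasm decoding library

- V. Implementing a WebSocket server to push the stream

- VI. Web client implementation

- VII. Results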

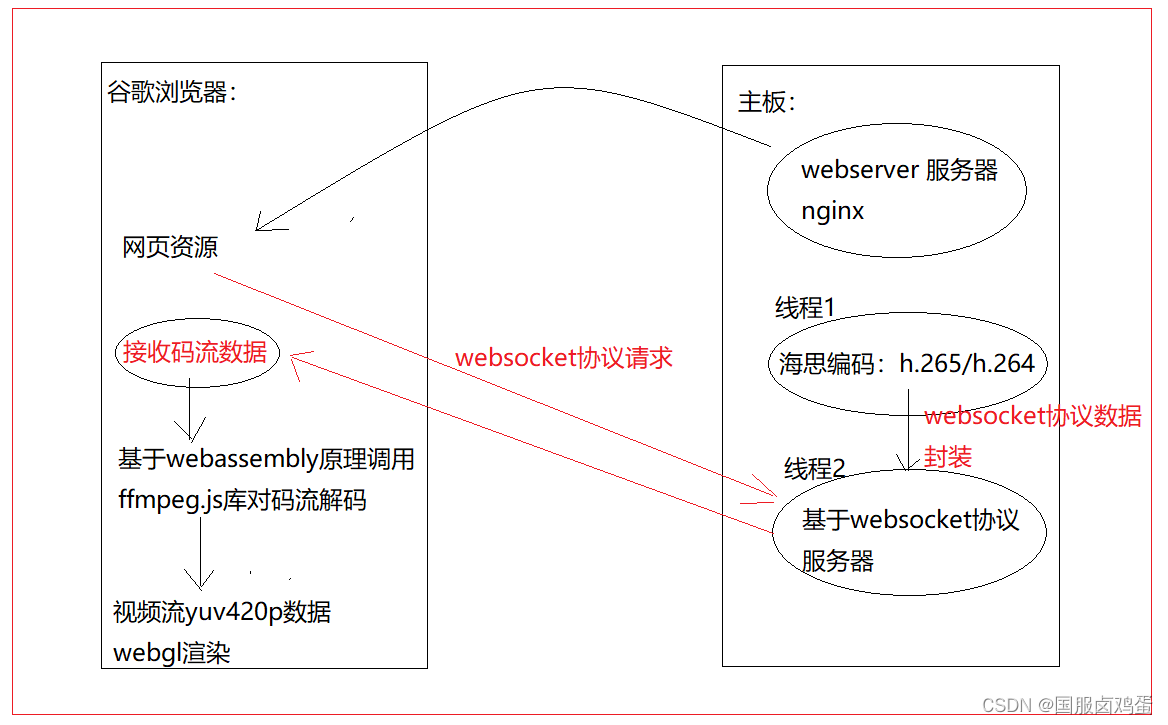

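Following the approach in those articles, I reproduced plugin-free H.265 decoding in the browser. First, a description of my environment and architecture.

I ported the nginx web server to a HiSilicon board so that a PC can request the page resources from it. Two threads run on the board: one drives the HiSilicon encoder, and the other runs a WebSocket server that pushes the H.265 stream to the browser. The browser receives the stream data, software-decodes it with the JS library compiled from ffmpeg, and renders the resulting YUV data with WebGL.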
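The decoder in ffmpeg.c only starts decoding once it has seen an SPS (H.264) or a VPS (H.265), and it finds that out from the NAL unit type of each incoming chunk. The sketch below shows the same check in JavaScript. It assumes the raw stream is an Annex B byte stream, where every NAL unit is preceded by a `00 00 01` or `00 00 00 01` start code. The function names `findNalHeader`, `nalUnitType` and `isDecoderEntryPoint` are made up for this illustration and are not part of the project.

```javascript
// Return the index of the NAL header byte that follows the first
// Annex B start code (00 00 01 or 00 00 00 01), or -1 if none is found.
function findNalHeader(bytes) {
  for (let i = 0; i + 3 < bytes.length; i++) {
    if (bytes[i] === 0 && bytes[i + 1] === 0) {
      if (bytes[i + 2] === 1) return i + 3;
      if (bytes[i + 2] === 0 && bytes[i + 3] === 1 && i + 4 < bytes.length) {
        return i + 4;
      }
    }
  }
  return -1;
}

// H.264 keeps the type in the low 5 bits of the header byte;
// H.265 keeps it in bits 1..6 of the first header byte.
function nalUnitType(bytes, codec) {
  const pos = findNalHeader(bytes);
  if (pos < 0) return -1;
  const header = bytes[pos];
  return codec === "h265" ? (header >> 1) & 0x3f : header & 0x1f;
}

// Mirrors the filter in ffmpeg.c: wait for SPS (7) or VPS (32).
function isDecoderEntryPoint(bytes, codec) {
  return nalUnitType(bytes, codec) === (codec === "h265" ? 32 : 7);
}

const sps = new Uint8Array([0, 0, 0, 1, 0x67, 0x42]);
console.log(nalUnitType(sps, "h264")); // 7
```

Unlike the C code, which reads byte 4 directly and therefore expects a 4-byte start code, this version also accepts the 3-byte form.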

I. Installing the emsdk toolchain

Installing Emscripten on Windows: https://www.cnblogs.com/tonghaolang/p/9253719.html

Installing emsdk on Windows and Linux:

https://blog.csdn.net/qq_34754747/article/details/103815349?depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromBaidu-11&utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromBaidu-11

Download the emsdk tool:

wget https://s3.amazonaws.com/mozilla-games/emscripten/releases/emsdk-portable.tar.gz

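II. Compiling ffmpeg with emsdk

Download the ffmpeg source, configure it on Linux with the command below, and then build it to obtain the ffmpeg static libraries.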

    emconfigure ./configure --cc="emcc" --prefix=/opt/tar-download/emccffmpeg \
        --enable-cross-compile --target-os=none --arch=x86_32 --cpu=generic --disable-ffplay --disable-ffprobe \
        --disable-asm --disable-doc --disable-devices --disable-pthreads --disable-w32threads --disable-network \
        --disable-hwaccels --disable-parsers --disable-bsfs --disable-debug --disable-protocols --disable-indevs \
        --disable-outdevs --enable-protocol=file --enable-decoder=hevc --enable-decoder=h264

III. The ffmpeg decoding library

ffmpeg.h

    #ifndef _FFMPEG_H_
    #define _FFMPEG_H_

    // Standard headers needed by the code below (printf, malloc/free, memcpy, uint8_t)
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <stdint.h>
    #include "libswscale/swscale.h"
    #include "libavutil/opt.h"
    #include "libavutil/imgutils.h"
    #include "libavcodec/avcodec.h"

    #define QUEUE_SIZE 20

    // Decoded image data
    typedef struct _imgdata{
        int width;
        int height;
        uint8_t* data;
    }Imgdata;

    // Decoder API
    extern int init_decoder(int codeid);                          // initialize the decoder
    extern Imgdata* decoder_raw(char* framedata, int framesize);  // decode
    extern uint8_t* process(AVFrame* frame, uint8_t* buff);       // copy the YUV data into buff
    extern void free_decoder();                                   // close the decoder

    #endif

ffmpeg.c

    #include "ffmpeg.h"

    AVCodec* codec;                        // codec description
    AVCodecParserContext* parser = NULL;
    AVCodecContext* c = NULL;              // codec context for the stream
    AVPacket* pkt = NULL;
    AVFrame* frame = NULL;                 // holds one decoded video frame
    Imgdata *imgbuff = NULL;
    static int codeId = 0;

    /**********************************************************
     * Purpose: initialize the decoder
     * Params:  codeid ---> 1 = open the H.265 decoder
     *                      0 = open the H.264 decoder
     *
     * Returns: 0  = initialized successfully
     *          -1 = initialization failed
     **********************************************************/
    int init_decoder(int codeid)
    {
        codeId = codeid;
        switch(codeid)
        {
            case 0:{
                codec = avcodec_find_decoder(AV_CODEC_ID_H264);
                break;
            }
            case 1:{
                codec = avcodec_find_decoder(AV_CODEC_ID_H265);
                break;
            }
            default:{
                printf("In C language no choose h.264/h.265 decoder\n");
                return -1;
            }
        }
        if(NULL == codec)
        {
            printf("codec find decoder failed\n");
            return -1;
        }

        parser = av_parser_init(codec->id);
        if(NULL == parser)
        {
            printf("can't jsparser init!\n");
            return -1;
        }

        c = avcodec_alloc_context3(codec);
        if(NULL == c)
        {
            printf("can't allocate video jscodec context!\n");
            return -1;
        }

        if(avcodec_open2(c, codec, NULL) < 0)
        {
            return -1;
        }

        // The rest of this function is missing from the source text. The
        // allocations below are the minimum that decoder_raw() and
        // free_decoder() rely on.
        frame = av_frame_alloc();
        pkt = av_packet_alloc();
        imgbuff = (Imgdata*)calloc(1, sizeof(Imgdata));   // data starts out NULL
        if(NULL == frame || NULL == pkt || NULL == imgbuff)
        {
            return -1;
        }
        return 0;
    }

    /**********************************************************
     * Purpose: decode raw stream data
     * Params:  framedata ----> raw stream data
     *          framesize ----> length of the raw stream data
     *
     * Returns: an Imgdata struct
     *          Imgdata->width   video width
     *          Imgdata->height  video height
     *          Imgdata->data    yuv420p data
     **********************************************************/
    Imgdata* decoder_raw(char* framedata, int framesize)
    {
        if(NULL == framedata || framesize <= 0)
        {
            printf("In C language framedata is null or framesize is zero\n");
            return NULL;
        }

        /*****************************************************
         * Filter: until the first SPS (H.264) or VPS (H.265) arrives,
         * skip every chunk without decoding it. Once it has been seen
         * (flag 0 -> 1) no further checks are made.
         * framedata[4] is the NAL header after a 4-byte start code.
         *****************************************************/
        int nut = 0;
        static int flag = 0;
        if(!flag)
        {
            if(codeId == 0)   // raw H.264
            {
                nut = framedata[4] & 0x1f;
                // 7 = SPS; PPS(8), IDR(5), P(1), AUD(9), SEI(6) and unknown types are skipped
                if(nut != 7)
                {
                    return NULL;
                }
            }
            else              // raw H.265
            {
                nut = (framedata[4] >> 1) & 0x3f;
                // 32 = VPS; SPS(33), PPS(34), IDR(19), P(1), AUD(35), SEI(39) and unknown types are skipped
                if(nut != 32)
                {
                    return NULL;
                }
            }
            flag = 1;
        }

        // Start decoding
        int ret = 0;
        int size = 0;
        while(framesize > 0)
        {
            size = av_parser_parse2(parser, c, &pkt->data, &pkt->size,
                                    (const uint8_t *)framedata, framesize,
                                    AV_NOPTS_VALUE, AV_NOPTS_VALUE, AV_NOPTS_VALUE);
            if(size < 0)
            {
                break;   // error handling for this branch is missing from the source
            }
            framedata += size;
            framesize -= size;

            if(pkt->size)
            {
                ret = avcodec_send_packet(c, pkt);
                if(ret < 0)
                {
                    break;   // error handling for this branch is missing from the source
                }
                while(ret >= 0)
                {
                    ret = avcodec_receive_frame(c, frame);
                    if(ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
                    {
                        break;
                    }
                    else if(ret < 0)
                    {
                        break;   // error handling for this branch is missing from the source
                    }

                    int yuvBytes = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, frame->width, frame->height, 1);
                    uint8_t *buff = (uint8_t*)malloc(yuvBytes);
                    if(buff == NULL)
                    {
                        printf("malloc yuvBytes failed!\n");
                        return NULL;
                    }
                    imgbuff->width = frame->width;
                    imgbuff->height = frame->height;
                    // Release the previous frame instead of freeing buff right away:
                    // the caller still reads imgbuff->data after this function returns.
                    free(imgbuff->data);
                    imgbuff->data = process(frame, buff);
                }
            }
        }
        return imgbuff;
    }

    /******************************************************
     * Purpose: copy one decoded frame into a packed YUV buffer
     * Params:  frame ----> decoded YUV frame
     *          buff -----> destination buffer
     *
     * Returns: buff, holding yuv420p data
     ******************************************************/
    uint8_t* process(AVFrame* frame, uint8_t* buff)
    {
        int i, j, k;
        // Y plane
        for(i=0; i<frame->height; i++)
        {
            memcpy(buff + frame->width*i, frame->data[0]+frame->linesize[0]*i, frame->width);
        }
        // U plane, written right after the Y plane (i == height here)
        for(j=0; j<frame->height/2; j++)
        {
            memcpy(buff + frame->width*i + frame->width/2*j, frame->data[1] + frame->linesize[1]*j, frame->width/2);
        }
        // V plane, written right after the U plane (j == height/2 here)
        for(k=0; k<frame->height/2; k++)
        {
            memcpy(buff + frame->width*i + frame->width/2*j + frame->width/2*k, frame->data[2]+frame->linesize[2]*k, frame->width/2);
        }
        return buff;
    }

    /*****************************************************
     * Purpose: close the decoder and release memory
     * Params:  none
     *
     * Returns: nothing
     *****************************************************/
    void free_decoder()
    {
        av_parser_close(parser);
        avcodec_free_context(&c);
        av_frame_free(&frame);
        av_packet_free(&pkt);
        if(imgbuff != NULL)
        {
            if(imgbuff->data != NULL)
            {
                free(imgbuff->data);
                imgbuff->data = NULL;
            }
            free(imgbuff);
            imgbuff = NULL;
        }
        printf("In C language free all requested memory! \n");
    }

test.c

    #include "ffmpeg.h"

    int main(int argc, const char **argv)
    {
        return 0;
    }

IV. Running the Makefile.sh script to generate the ffmpeg.js and ffmpeg.wasm decoding library

Makefile.sh

    echo "start building"
    export TOTAL_MEMORY=67108864
    export EXPORTED_FUNCTIONS="[ \
        '_init_decoder', \
        '_decoder_raw', \
        '_free_decoder', \
        '_main' \
    ]"
    emcc test.c ffmpeg.c ../lib/libavcodec.a ../lib/libavutil.a ../lib/libswscale.a \
        -O2 \
        -I "../inc" \
        -s WASM=1 \
        -s USE_PTHREADS=1 \
        -s TOTAL_MEMORY=${TOTAL_MEMORY} \
        -s EXPORTED_FUNCTIONS="${EXPORTED_FUNCTIONS}" \
        -s EXTRA_EXPORTED_RUNTIME_METHODS="['addFunction']" \
        -s RESERVED_FUNCTION_POINTERS=14 \
        -s FORCE_FILESYSTEM=1 \
        -o ../js/libffmpeg.js
    echo "finish building"

V. Implementing a WebSocket server to push the stream

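Reference articles: "WebSocket的C++服务器端实现" (a C++ WebSocket server implementation)

and "WebSocket协议解析" (an analysis of the WebSocket protocol).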
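Before a chunk of the stream is written to the socket, the push thread has to wrap it in a WebSocket frame; the referenced C implementation takes care of that. As a rough illustration of the framing rule in RFC 6455, here is a JavaScript sketch of how a server could build an unmasked binary frame. `buildBinaryFrame` is a hypothetical name used only for this example and is not the code that runs on the board.

```javascript
// Build one server-to-client binary frame as described in RFC 6455.
// Byte 0: FIN = 1, opcode = 0x2 (binary) -> 0x82.
// Byte 1: MASK = 0 (servers do not mask) plus the 7-bit length field.
function buildBinaryFrame(payload) {
  const len = payload.length;
  let header;
  if (len <= 125) {
    header = new Uint8Array([0x82, len]);
  } else if (len <= 0xffff) {
    // 126 means the next 2 bytes carry the length (big-endian)
    header = new Uint8Array([0x82, 126, (len >> 8) & 0xff, len & 0xff]);
  } else {
    // 127 means the next 8 bytes carry the length (big-endian)
    header = new Uint8Array(10);
    header[0] = 0x82;
    header[1] = 127;
    const view = new DataView(header.buffer);
    view.setUint32(2, Math.floor(len / 2 ** 32)); // high 32 bits
    view.setUint32(6, len >>> 0);                 // low 32 bits
  }
  const frame = new Uint8Array(header.length + len);
  frame.set(header, 0);
  frame.set(payload, header.length);
  return frame;
}

const frame = buildBinaryFrame(new Uint8Array(300));
console.log(frame.length); // 304: 4 header bytes + 300 payload bytes
```

An encoded 1080p keyframe is usually larger than 65535 bytes, so the 8-byte length form is the one a video stream tends to hit; on the browser side, `binaryType = "arraybuffer"` delivers the payload without the header.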

    // System headers used by this fragment. WebSocket_server, webSocket_recv,
    // webSocket_send, WDT_TXTDATA and EPOLL_RESPOND_NUM are not defined in this post.
    #include <stdio.h>
    #include <string.h>
    #include <errno.h>
    #include <unistd.h>
    #include <sys/socket.h>
    #include <sys/epoll.h>
    #include <netinet/in.h>
    #include <arpa/inet.h>

    /*******************************************************************************
     * Name:    server_thread_fun
     * Purpose: thread that runs the WebSocket server
     * Params:  arg: WebSocket_server struct
     * Returns: nothing
     ******************************************************************************/
    void server_thread_fun(void *arg)
    {
        int ret, j;
        int cfd;
        char ip[16] = "";
        struct sockaddr_in serverAddr;
        struct sockaddr_in clientAddr;
        socklen_t client_len = sizeof(clientAddr);
        WebSocket_server *wss = (WebSocket_server *)arg;

        memset(&serverAddr, 0, sizeof(serverAddr));
        serverAddr.sin_family = AF_INET;
        serverAddr.sin_port = htons(wss->port);
        serverAddr.sin_addr.s_addr = INADDR_ANY;

        wss->fd = socket(AF_INET, SOCK_STREAM, 0);
        if(wss->fd < 0)
        {
            return;   // error handling for this branch is missing from the source
        }
        int opt = 1;
        setsockopt(wss->fd, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt));
        bind(wss->fd, (struct sockaddr*)&serverAddr, sizeof(serverAddr));
        listen(wss->fd, 128);

        // Create the epoll instance
        int epfd = epoll_create(1);
        if(epfd < 0)
        {
            close(wss->fd);
            return;   // error handling for this branch is missing from the source
        }
        struct epoll_event ev, evs[1024];
        ev.data.fd = wss->fd;
        ev.events = EPOLLIN | EPOLLET;
        epoll_ctl(epfd, EPOLL_CTL_ADD, wss->fd, &ev);

        while(1)
        {
            int n = epoll_wait(epfd, evs, 1024, -1);   // wait for events
            if(n < 0)
            {
                break;   // error handling for this branch is missing from the source
            }
            for(j = 0; j < n; j++)
            {
                if(evs[j].data.fd == wss->fd)   // a client is connecting
                {
                    cfd = accept(wss->fd, (struct sockaddr *)&clientAddr, &client_len);   // take the new connection
                    if(cfd >= 0)
                    {
                        ev.data.fd = cfd;
                        epoll_ctl(epfd, EPOLL_CTL_ADD, cfd, &ev);
                    }
                    //printf("websocketV2 client ip=%s port=%d fd=%d\n",inet_ntop(AF_INET, &clientAddr.sin_addr.s_addr, ip, 16),ntohs(clientAddr.sin_port),cfd);
                }
                else if(evs[j].events & EPOLLIN)   // data received
                {
                    memset(wss->buf, 0, sizeof(wss->buf));
                    ret = wss->callback(wss, evs[j].data.fd, wss->buf, sizeof(wss->buf));   // callback
                    if(ret <= 0)
                    {
                        if(errno > 0 && (errno == EAGAIN || errno == EINTR))
                            ;
                        else
                        {
                            //printf("accept close=%d errno =%d\n",evs[j].data.fd,errno);
                            ev.data.fd = evs[j].data.fd;
                            if(epoll_ctl(epfd, EPOLL_CTL_DEL, evs[j].data.fd, &ev) < 0)
                            {
                                // error handling for this branch is missing from the source
                            }
                            // The source is truncated here. Closing the client and dropping
                            // it from the fd array is the evident intent.
                            close(evs[j].data.fd);
                            arrayRemoveItem(wss->client_fd_array, EPOLL_RESPOND_NUM, evs[j].data.fd);
                        }
                    }
                }
            }
        }
        close(epfd);
        close(wss->fd);
    }

    /*******************************************************************************
     * Name:    server_callback
     * Purpose: callback invoked when a client socket has data
     * Params:  wss:    WebSocket_server struct
     *          fd:     client socket descriptor
     *          buf:    data buffer
     *          buflen: size of the buffer
     * Returns: the result of the receive or handshake step
     ******************************************************************************/
    int ret1=0;
    int server_callback(WebSocket_server *wss, int fd, char *buf, unsigned int buflen)
    {
        ret1 = webSocket_recv(fd, buf, buflen, NULL);   // receive data sent by the client
        if(ret1 > 0)
        {
    #if 0
            printf(" client->server recv msg fd=%d mgs=%s\n", fd, buf);
            if(strstr(buf, "hi~") != NULL)
                ret1 = webSocket_send(fd, "I am server", strlen("I am server"), false, WDT_TXTDATA);
    #endif
        }
        else if(ret1 < 0)
        {
            /* Part of this branch is missing from the source. Presumably it
             * performs the WebSocket handshake and stores the result in ret1. */
            if(ret1 > 0)
            {
                arrayAddItem(wss->client_fd_array, EPOLL_RESPOND_NUM, fd);   // once connected, add fd to the array
            }
        }
        return ret1;
    }

    /*******************************************************************************
     * Name:    arrayAddItem
     * Purpose: add a client fd to the array
     * Params:  array:     two-dimensional array of {fd, in-use flag}
     *          arraySize: number of slots
     *          value:     client fd
     * Returns: 0 on success, -1 if the array is full
     ******************************************************************************/
    int arrayAddItem(int array[][2], int arraySize, int value)
    {
        int i;
        for(i=0; i<arraySize; i++)
        {
            if(array[i][1] == 0)
            {
                array[i][0] = value;
                array[i][1] = 1;
                return 0;
            }
        }
        return -1;
    }

    /*******************************************************************************
     * Name:    arrayRemoveItem
     * Purpose: remove a client fd from the array
     * Params:  array:     two-dimensional array of {fd, in-use flag}
     *          arraySize: number of slots
     *          value:     client fd
     * Returns: 0 on success, -1 if the fd was not found
     ******************************************************************************/
    int arrayRemoveItem(int array[][2], int arraySize, int value)
    {
        int i = 0;
        for(i=0; i<arraySize; i++)
        {
            if(array[i][0] == value)
            {
                array[i][0] = 0;
                array[i][1] = 0;
                return 0;
            }
        }
        return -1;
    }

VI. Web client implementation

    // WebGL renderer for yuv420p frames
    function Texture(gl) {
        this.gl = gl;
        this.texture = gl.createTexture();
        gl.bindTexture(gl.TEXTURE_2D, this.texture);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
        gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
    }

    Texture.prototype.bind = function (n, program, name) {
        var gl = this.gl;
        gl.activeTexture([gl.TEXTURE0, gl.TEXTURE1, gl.TEXTURE2][n]);
        gl.bindTexture(gl.TEXTURE_2D, this.texture);
        gl.uniform1i(gl.getUniformLocation(program, name), n);
    };

    Texture.prototype.fill = function (width, height, data) {
        var gl = this.gl;
        gl.bindTexture(gl.TEXTURE_2D, this.texture);
        gl.texImage2D(gl.TEXTURE_2D, 0, gl.LUMINANCE, width, height, 0, gl.LUMINANCE, gl.UNSIGNED_BYTE, data);
    };

    function WebGLPlayer(canvas, options) {
        this.canvas = canvas;
        this.gl = canvas.getContext("webgl") || canvas.getContext("experimental-webgl");
        this.initGL(options);
    }

    WebGLPlayer.prototype.initGL = function (options) {
        if (!this.gl) {
            console.log("[ER] WebGL not supported.");
            return;
        }

        var gl = this.gl;
        gl.pixelStorei(gl.UNPACK_ALIGNMENT, 1);
        var program = gl.createProgram();
        var vertexShaderSource = [
            "attribute highp vec4 aVertexPosition;",
            "attribute vec2 aTextureCoord;",
            "varying highp vec2 vTextureCoord;",
            "void main(void) {",
            " gl_Position = aVertexPosition;",
            " vTextureCoord = aTextureCoord;",
            "}"
        ].join("\n");
        var vertexShader = gl.createShader(gl.VERTEX_SHADER);
        gl.shaderSource(vertexShader, vertexShaderSource);
        gl.compileShader(vertexShader);
        var fragmentShaderSource = [
            "precision highp float;",
            "varying lowp vec2 vTextureCoord;",
            "uniform sampler2D YTexture;",
            "uniform sampler2D UTexture;",
            "uniform sampler2D VTexture;",
            "const mat4 YUV2RGB = mat4",
            "(",
            " 1.1643828125, 0, 1.59602734375, -.87078515625,",
            " 1.1643828125, -.39176171875, -.81296875, .52959375,",
            " 1.1643828125, 2.017234375, 0, -1.081390625,",
            " 0, 0, 0, 1",
            ");",
            "void main(void) {",
            " gl_FragColor = vec4( texture2D(YTexture, vTextureCoord).x, texture2D(UTexture, vTextureCoord).x, texture2D(VTexture, vTextureCoord).x, 1) * YUV2RGB;",
            "}"
        ].join("\n");

        var fragmentShader = gl.createShader(gl.FRAGMENT_SHADER);
        gl.shaderSource(fragmentShader, fragmentShaderSource);
        gl.compileShader(fragmentShader);
        gl.attachShader(program, vertexShader);
        gl.attachShader(program, fragmentShader);
        gl.linkProgram(program);
        gl.useProgram(program);
        if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
            console.log("[ER] Shader link failed.");
        }
        var vertexPositionAttribute = gl.getAttribLocation(program, "aVertexPosition");
        gl.enableVertexAttribArray(vertexPositionAttribute);
        var textureCoordAttribute = gl.getAttribLocation(program, "aTextureCoord");
        gl.enableVertexAttribArray(textureCoordAttribute);

        var verticesBuffer = gl.createBuffer();
        gl.bindBuffer(gl.ARRAY_BUFFER, verticesBuffer);
        gl.bufferData(gl.ARRAY_BUFFER, new Float32Array([1.0, 1.0, 0.0, -1.0, 1.0, 0.0, 1.0, -1.0, 0.0, -1.0, -1.0, 0.0]), gl.STATIC_DRAW);
        gl.vertexAttribPointer(vertexPositionAttribute, 3, gl.FLOAT, false, 0, 0);
        var texCoordBuffer = gl.createBuffer();
        gl.bindBuffer(gl.ARRAY_BUFFER, texCoordBuffer);
        gl.bufferData(gl.ARRAY_BUFFER, new Float32Array([1.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 1.0]), gl.STATIC_DRAW);
        gl.vertexAttribPointer(textureCoordAttribute, 2, gl.FLOAT, false, 0, 0);

        // One texture per plane
        gl.y = new Texture(gl);
        gl.u = new Texture(gl);
        gl.v = new Texture(gl);
        gl.y.bind(0, program, "YTexture");
        gl.u.bind(1, program, "UTexture");
        gl.v.bind(2, program, "VTexture");
    }

    // uOffset is the size of the Y plane, vOffset the size of the U plane
    WebGLPlayer.prototype.renderFrame = function (videoFrame, width, height, uOffset, vOffset) {
        if (!this.gl) {
            console.log("[ER] Render frame failed due to WebGL not supported.");
            return;
        }

        var gl = this.gl;
        gl.viewport(0, 0, gl.canvas.width, gl.canvas.height);
        gl.clearColor(0.0, 0.0, 0.0, 0.0);
        gl.clear(gl.COLOR_BUFFER_BIT);

        gl.y.fill(width, height, videoFrame.subarray(0, uOffset));
        gl.u.fill(width >> 1, height >> 1, videoFrame.subarray(uOffset, uOffset + vOffset));
        gl.v.fill(width >> 1, height >> 1, videoFrame.subarray(uOffset + vOffset, videoFrame.length));

        gl.drawArrays(gl.TRIANGLE_STRIP, 0, 4);
    };

    WebGLPlayer.prototype.fullscreen = function () {
        var canvas = this.canvas;
        if (canvas.requestFullscreen) {
            canvas.requestFullscreen();
        } else if (canvas.webkitRequestFullScreen) {
            canvas.webkitRequestFullScreen();
        } else if (canvas.mozRequestFullScreen) {
            canvas.mozRequestFullScreen();
        } else if (canvas.msRequestFullscreen) {
            canvas.msRequestFullscreen();
        } else {
            alert("This browser doesn't support fullscreen");
        }
    };

    WebGLPlayer.prototype.exitfullscreen = function (){
        if (document.exitFullscreen) {
            document.exitFullscreen();
        } else if (document.webkitExitFullscreen) {
            document.webkitExitFullscreen();
        } else if (document.mozCancelFullScreen) {
            document.mozCancelFullScreen();
        } else if (document.msExitFullscreen) {
            document.msExitFullscreen();
        } else {
            alert("Exit fullscreen doesn't work");
        }
    }

video.js (WebSocket receiver)

    var canvas;
    var webglPlayer;
    var width = 0;
    var height = 0;
    var socketurl = "ws://192.168.1.213:8000";   // WebSocket server IP and port

    // codeid = 1: decode a raw H.265 stream
    function playH265()
    {
        play(1);
    }

    // codeid = 0: decode a raw H.264 stream
    function playH264()
    {
        play(0);
    }

    function play(codeid)
    {
        // Create the WebSocket object
        var websocket = new WebSocket(socketurl);
        websocket.binaryType = "arraybuffer";   // receive messages as ArrayBuffer

        // Browser --> WebSocket server: the connection is open
        websocket.onopen = function()
        {
            // Check the connection state
            if(websocket.readyState == 0){
                alert("browser connect websocket server failed,please reconnect");
                return;
            }
            else if(websocket.readyState == 1){
                console.log("In javascript language connect websocket server success!");
            }
            websocket.send("connect websocket server");   // send data to the server

            // Initialize the decoder: 1 = H.265, 0 = H.264
            var ret = Module._init_decoder(codeid);
            if(ret != 0)
            {
                console.log("init decoder failed!");
                return ;
            }
        };

        // Receive data from the WebSocket server
        let ptr;
        websocket.onmessage = function(evt)
        {
            let size = evt.data.byteLength;
            let buff = new Uint8Array(evt.data);   // evt.data holds the raw stream as an ArrayBuffer
            // Mobile browsers did not support calling the function this way
            // (a WebAssembly browser-compatibility issue)
            let buf = Module._malloc(size);
            Module.HEAPU8.set(buff, buf);           // copy the raw stream into the wasm heap
            ptr = Module._decoder_raw(buf, size);   // decode with ffmpeg

            // decoder_raw returns NULL (0) while it is still waiting for the first VPS/SPS
            if(ptr !== 0)
            {
                // Imgdata layout: width, height, data pointer (4 bytes each)
                width = Module.HEAPU32[ptr/4];
                height = Module.HEAPU32[ptr/4 + 1];
                let imgbuffptr = Module.HEAPU32[ptr/4 + 2];
                if(imgbuffptr !== 0)
                {
                    let imgbuff = Module.HEAPU8.subarray(imgbuffptr, imgbuffptr + width*height*3/2);
                    showYuv420pdata(width, height, imgbuff);   // render the yuv420p data with WebGL
                }
            }

            Module._free(buf);   // release the input buffer
        };

        // The WebSocket connection was closed
        websocket.onclose = function()
        {
            // Close the decoder and release memory
            Module._free_decoder();
            console.log("In javascript language websocket client close !");
        };

        // A WebSocket communication error occurred
        websocket.onerror = function()
        {
            alert("The WebSocket server is not running!");
            return ;
        }
    }

    /***************************************************************
     Render yuv420p data with WebGL
     **************************************************************/
    function showYuv420pdata(width, height, data)
    {
        let yLength = width*height;
        let uvLength = width*height/4;
        if(!webglPlayer)
        {
            const canvasId = "canvas";
            canvas = document.getElementById(canvasId);
            webglPlayer = new WebGLPlayer(canvas, {
                preserveDrawingBuffer:false
            });
        }
        webglPlayer.renderFrame(data, width, height, yLength, uvLength);
    }

    /***************************************************************
     Fullscreen via the WebGL player
     **************************************************************/
    function fullscreen()
    {
        if(!webglPlayer)
        {
            alert("Nothing has been rendered with WebGL yet, so fullscreen is not available");
            return;
        }
        webglPlayer.fullscreen();
    }

VII. Results

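I did this work in June 2020 and forgot to take screenshots at the time, so there are no images. Here is how the approach performed in my tests.

Decoding a 1080p 30 fps H.265 stream: latency of 300 to 450 ms.

Decoding a 4K 30 fps H.265 stream: latency keeps growing the longer playback runs, so it is not usable. This approach suits resolutions of 1080p and below.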
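I did not profile where the time goes, but simple arithmetic shows how much more work 4K asks of a software decoder. The sketch below computes the size of one decoded yuv420p frame and the resulting output rate, assuming "4K" means 3840x2160; `yuv420pFrameBytes` and `decodedThroughput` are names invented for this example.

```javascript
// One yuv420p frame: a full-resolution Y plane plus two
// quarter-resolution chroma planes, i.e. width * height * 3 / 2 bytes.
function yuv420pFrameBytes(width, height) {
  return width * height * 3 / 2;
}

// Bytes of decoded output per second and the time budget per frame.
function decodedThroughput(width, height, fps) {
  const frameBytes = yuv420pFrameBytes(width, height);
  return {
    frameBytes: frameBytes,
    bytesPerSecond: frameBytes * fps,
    frameBudgetMs: 1000 / fps
  };
}

const hd = decodedThroughput(1920, 1080, 30);
const uhd = decodedThroughput(3840, 2160, 30);
console.log(hd.frameBytes);   // 3110400
console.log(uhd.frameBytes);  // 12441600
console.log(uhd.bytesPerSecond / hd.bytesPerSecond); // 4
```

Both streams have the same budget of about 33 ms per frame, but 4K carries four times the pixels. If decoding plus the copy into the WebGL textures takes longer than that budget, frames queue up and the delay grows with playback time, which would be consistent with what I observed.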